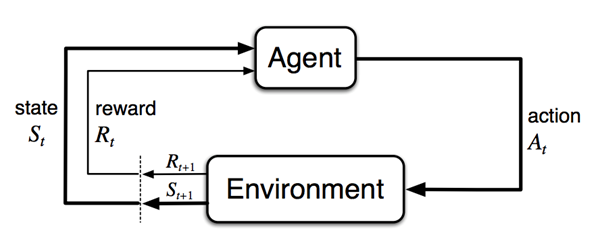

Figure 1. Reinforcement Learning agent acting in an unknown environment (Image source: Reinforcement Learning - An Introduction by Sutton and Barto)

Deep Learning is a branch of AI that uses artificial neural networks (ANNs) to tackle complex problems. It has been applied with success across a variety of disciplines, often achieving state-of-the-art results. Although ANNs were proposed decades ago, their effective use has only come in recent years, due primarily to substantial increases in computing power and in the size of datasets available for training and refining ANN models. Deep Learning has proven particularly useful for automatically extracting structure and meaning from relatively raw data, which has allowed AI pioneers to take on projects without the deep human domain knowledge previously necessary for extracting value from raw data like images, text, and video. New analytic innovations need no longer be bottlenecked by the limited availability of specialist domain experts, and can instead be developed by generalist data scientists, and even by AI-savvy software developers. While subject matter expertise is and will remain useful, AI complements such expertise by providing data-driven and previously undiscovered representations of the features of data that matter for analytic tasks, representations from outside the well-worn conceptual traditions of subject matter experts.

Building on the strengths of ANNs, Deep Reinforcement Learning has likewise made the transition from theory to practice and has shown extraordinary results in solving complex problems. Notably, DeepMind used Deep Reinforcement Learning methods to create AlphaGo, the first AI agent able to defeat a world champion at the game of Go. Given the complexity of the game, this feat had been thought to be almost impossible.

Reinforcement Learning (RL) is an especially exciting area of AI. In the RL approach, an AI agent observes states in its environment and takes actions so as to maximize rewards accumulated over the future (see Figure 1). Unlike in supervised learning, the agent is not given any direction or indication as to what the optimal actions are, but must instead learn from experience which situations lead to maximum reward. Just as ANNs can use data to discover representations that go beyond the concepts and theories of subject matter experts to help with classification and prediction, RL uses data to learn sequences of actions that maximize desired outcomes, action sequences that may be dramatically different from the actions human experts would recommend. The key to the value of RL is in the sequence of interrelated actions: effective actions are frequently not isolated decisions, but instead have a compounding effect on future observed states, which in turn affect the value of future actions. The end goal is for the agent to learn some optimal policy, or decision strategy, by which it can map any state it comes across to an action leading to maximum future rewards. By combining Deep Learning and Reinforcement Learning, it is possible to generalize across large action and state spaces.
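The observe-act-reward loop described above can be sketched in a few lines of Python. Everything here is hypothetical and chosen only for illustration: the `ToyEnvironment` corridor, its reward of 1.0 at the goal, and the two hand-written policies are assumptions, not part of any real system.

```python
import random


class ToyEnvironment:
    """Hypothetical 1-D corridor: start at position 0, reward 1.0 on reaching the goal."""

    def __init__(self, goal=3):
        self.goal = goal
        self.position = 0

    def reset(self):
        # Put the agent back at the start and return the initial state.
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the position cannot go below 0.
        self.position = max(0, self.position + action)
        done = self.position == self.goal
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode: observe a state, act, collect the reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the policy maps state -> action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    # Explores blindly; a learning agent would improve on this over time.
    return random.choice([-1, 1])


def always_right(state):
    # A hand-written policy that happens to be optimal in this toy corridor.
    return 1
```

A policy is just a mapping from state to action; learning consists of replacing something like `random_policy` with a mapping that collects more reward.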

Tackling Real World Problems

The applicability of RL in the enterprise is vast and largely untapped. To date, most Deep Reinforcement Learning successes have focused on its application to games and robotics. In such cases, emulators and simulators are readily available and present the perfect environment in which to run trials without risk. By contrast, many of the problems that companies wish to solve do not come with a risk-free testing environment: It can be difficult and sometimes impossible to allow an AI agent to freely and rapidly explore the impact of its potential actions through trial and error.

But the availability of a simulator is not essential to effectively applying RL techniques in enterprise settings. With the right methodology and the right platform for managing and processing vast amounts of historical data, it is possible to train the agent and prepare it for deployment in the real world. Methodologies exist to take risk appropriately into account without impacting an agent’s ability to learn a policy that outperforms the status quo and delivers business results. One attractive methodology is known as off-policy learning. Off-policy techniques are designed specifically to allow agents to learn a desired target policy from experience generated by a different behavior policy, such as the decisions recorded in historical data.
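To make the off-policy idea concrete, here is a minimal sketch of tabular Q-learning applied to a fixed log of transitions. It is a deliberately simplified stand-in, not a deep RL method, and the state names, action names, rewards, and hyperparameters (`alpha`, `gamma`, `sweeps`) are all assumptions made up for the example.

```python
from collections import defaultdict


def q_learning_from_logs(transitions, actions, alpha=0.5, gamma=0.9, sweeps=50):
    """Learn Q-values from logged (state, action, reward, next_state, done) tuples.

    The agent never acts in the environment; it only replays what the
    behavior policy did in the past.
    """
    q = defaultdict(float)
    for _ in range(sweeps):
        for state, action, reward, next_state, done in transitions:
            # Off-policy target: assume the BEST next action will be taken,
            # regardless of what the behavior policy actually did next.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
    return q


def greedy_policy(q, state, actions):
    """Target policy: pick the action with the largest learned Q-value."""
    return max(actions, key=lambda a: q[(state, a)])


# Hypothetical log: the behavior policy mostly chose "low" at the start.
ACTIONS = ["low", "high"]
LOGGED = [
    ("start", "low", 1.0, "end", True),
    ("start", "low", 1.0, "end", True),
    ("start", "low", 1.0, "end", True),
    ("start", "high", 0.0, "later", False),
    ("later", "low", 1.0, "end", True),
    ("later", "high", 5.0, "end", True),
]

q_table = q_learning_from_logs(LOGGED, ACTIONS)
```

In this toy log the behavior policy chose "low" three times out of four at `start`, yet the learned target policy prefers "high" there, because the log also shows that "high" can lead to a larger reward later.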

Take airline ticket pricing as an example. In this example, the actions taken by the agent (an AI agent, not a human ticketing agent!) are defined as the price at which a ticket is sold to an individual customer. The states the agent observes are features of the customer such as demographics and previous buying behavior, the current inventory of seats, and a host of other features representing network-wide information. The model is trained to maximize the total revenue for a particular flight. We cannot simply drop our agent into a ticketing sales system and allow it to learn by altering ticket prices and wreaking havoc on an airline’s profit until it eventually discovers a decent policy. Instead, we ask our agent to learn a pricing policy that maximizes future profits by first training it on the behavior policy captured in historical data on prior customer interactions and sales, among other things. Indeed, the more relevant data that can be assembled, the better the agent can learn from that behavior policy. After the agent learns such a policy, the risk of introducing it into the ticket sales system is greatly reduced. The initial policy learnt can then be refined in the environment through A/B testing that limits enterprise risk and maximizes business results.

Leveraging Deep Q Networks

Figure 2. ANN approximating Q-values

Deep Q Networks (DQN) is a standard off-policy Reinforcement Learning algorithm. DQN is used to learn an action-value function. The action-value function (also known as the Q-function) attempts to accurately approximate the expected return given a state and action; these estimates are called Q-values. The return is defined as the total (typically discounted) sum of rewards moving forward from a state and action. Given an optimal action-value function, a policy can easily be derived by simply selecting the action with the largest Q-value for a given state. In real world problems, the state and action spaces are large, and it becomes infeasible to memorize state-action pairs in a table. So instead of explicitly memorizing pairs, we can use ANNs to approximate Q-values, which is how we use RL and ANNs together to create a powerful solution.
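As a rough illustration of an ANN standing in for the Q-table, the sketch below runs a tiny one-hidden-layer network that maps a state vector to one Q-value per action, then derives the greedy action and a one-step learning target. It is written in plain Python with untrained random weights and no bias terms, and it leaves out training altogether (including the experience replay and target network that DQN uses in practice). All function names and sizes are assumptions for the example.

```python
import math
import random


def init_network(n_inputs, n_hidden, n_actions, seed=0):
    """Create small random weight matrices (hypothetical, untrained)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_actions)]
    return w1, w2


def q_values(network, state):
    """Forward pass: state features in, one estimated Q-value per action out."""
    w1, w2 = network
    hidden = [math.tanh(sum(w * x for w, x in zip(row, state))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def greedy_action(network, state):
    """Policy derived from the Q-function: index of the largest Q-value."""
    values = q_values(network, state)
    return max(range(len(values)), key=lambda a: values[a])


def td_target(network, reward, next_state, done, gamma=0.99):
    """One-step target: reward plus the discounted best next Q-value."""
    if done:
        return reward
    return reward + gamma * max(q_values(network, next_state))


network = init_network(n_inputs=3, n_hidden=4, n_actions=2)
example_state = [0.2, -0.1, 0.7]
```

Because the network computes Q-values from features rather than looking them up, states that never appeared in training still receive an estimate, which is what makes large state spaces tractable.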

In our airline example, the Q-function would estimate the expected future profits for a given individual customer and an offered ticket price. Here the expected future profits include future ticket purchases by that individual.

Because it is off-policy, DQN allows us to apply Deep Reinforcement Learning to problems in which we cannot simulate actions. However, the difficulty with using DQN is the algorithm’s assumption that each and every state-action pair has been visited countless times, which historical data rarely satisfies. To overcome this, we consider two approaches. In the first approach, we limit the size of the state and action space, which forces the model to generalize at a granularity at which coverage is supported by the historical data. While this technique will work, the less granular our state and action space, the more likely we are to lose valuable information, resulting in suboptimal policies. This leads to the second approach, in which we do not significantly limit the state-action space but rather drive down the Q-values for state-action pairs that have not been visited in the historical data. By doing this, we prevent overestimating the expected future rewards of unseen state-action pairs. Ideally, this results in an agent that stays close to what it has seen while still improving on the performance of the behavior policy.
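The two approaches can be sketched roughly as follows. This is only one simple way to realize each idea, under assumptions of our own: the feature names and bin widths in `coarse_state`, the fixed `penalty`, and the `min_count` threshold are all hypothetical, and the text above does not prescribe how Q-values should be driven down.

```python
from collections import Counter


def coarse_state(age, seats_left, age_bin=20, seat_bin=50):
    """Approach 1: bucket raw features so that each coarse state is
    more likely to be covered by the historical data."""
    return (age // age_bin, seats_left // seat_bin)


def penalized_q(q, counts, state, action, penalty=10.0, min_count=1):
    """Approach 2: lower the Q-value of pairs the historical data never visited."""
    value = q.get((state, action), 0.0)
    if counts[(state, action)] < min_count:
        value -= penalty
    return value


def conservative_greedy(q, counts, state, actions, penalty=10.0):
    """Pick the best action after penalizing unseen state-action pairs."""
    return max(actions, key=lambda a: penalized_q(q, counts, state, a, penalty))


# Hypothetical Q-values: "extreme" was never tried, so its estimate is unreliable.
ACTIONS = ["low", "mid", "extreme"]
q = {("s", "low"): 2.0, ("s", "mid"): 3.0, ("s", "extreme"): 8.0}
counts = Counter([("s", "low")] * 5 + [("s", "mid")] * 2)

plain_choice = max(ACTIONS, key=lambda a: q[("s", a)])
safe_choice = conservative_greedy(q, counts, "s", ACTIONS)
```

Here the unpenalized choice is the never-observed "extreme" price, while the penalized choice is "mid": an action the data supports that still beats the most common historical action, "low".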